I attended the World Usability Day Unconference facilitated by UXPA DC today at ByteCubed in Crystal City.

You could show up and volunteer to give a talk. Man, I really have been out of touch with the design community. It’s been a long time. I’ve been to UI events before, but I’ve never been to one focused on design ethics. It’s a perennial topic.

There were unconference talks, a networking break, and then a workshop facilitated by Kat Zhou.

THE KILLER APP. LITERALLY?

I shared the following with the group. Here’s the TLDR. I don’t want to kill anyone, directly or indirectly. I want to build cool, beautiful products and tools that help people make, manage, learn, and build, and that leave them feeling satisfied and accomplished when they’re done.

When I first joined a defense contractor (a small startup that was ultimately swallowed up), I was fascinated by the challenges. Interesting problems and a lot of low-hanging fruit, and also mind-numbing complexity. Our product was like Google Earth for the military. It was like a video game in a lot of ways. You track assets, points of interest, human intel, events, etc. Brilliant stuff.

As the product evolved, needs evolved. Data analysis was growing in importance relative to awareness and planning. Who knows whom? Are there predictable patterns? Is there a correlation between people and events?

I love maps. I love the stories that data can tell.

Then it got real.

It’s all fun and games until someone asks if it’s possible to use your software for targeting. Oy gevalt. Life and death, literally.

I was uncomfortable, to be honest. This is where money, contracts, duty to the mission, patriotism, pragmatism, personal ethics, politics, and even philosophy come into play.

What are the standards, and what’s the error tolerance, for using life/death software? If you’re in a geographic region with spotty connectivity, there are going to be data lags. Would it be ethical to use outdated data to target people and places for destruction? Obviously, these are questions that governments have answered for themselves, since militaries around the world use semi-autonomous and remote hardware to … neutralize designated enemy targets, i.e., to kill people and blow things up.

Personally, I have no answers. If you have issues working on such products, the defense industry may not be a good fit. Note: defense contractors also make an effort to contract in other domains. Government contractors might be a more accurate term.

Is it possible to request to be on a project or not be on a project based on your personal ethics? To keep it real, you’ll probably find yourself in the job market if you make waves. National security and making waves? Those are not two great tastes that taste great together.

I was much happier when we made modifications for corporate and government industries. Disaster Management is more my speed, to be honest. That was satisfying on multiple levels. That’s the kind of work I love to do.

Tangent: Captive users

Here’s an interesting fact about the military: from a design point of view, you have a captive audience. This doesn’t make for the most progressive designs. (Hardware can also be a limiting factor, as can connectivity.)

Soldiers, for example, are ordered to use software. Sure, feedback is gathered and companies make efforts to provide solutions, but the solutions? Due to tight timelines and powerful clients, solutions are often quick fixes and patches, and NOT the state-of-the-art, sci-fi-movie or gorgeously minimalist interfaces we all hope to produce.

Someone commented about Google and Microsoft employees protesting, and influencing their employers, when those companies agreed to questionable collaborations with the government. That wouldn’t fly for a defense contractor, I assume. It’s the nature of the business. But you never know.

Of course, no matter what you design or build, in whatever field, it can always be used in ways you didn’t foresee. I’m sure that when Facebook was created, no one foresaw that it would be in the business of verifying news sources, hiring thousands of contractors to parse out hate speech, and arbitrating how much of a breast can be shown before it’s considered obscene.

UNCONFERENCE TALKS

Foundations of the Unknown

- A talk on facing the unknown and taking steps to a solution.

- Mindsets: Why, Learning, Collaboration, Results

- What is bodystorming?? I’ve never heard that term before.

- “Socializing” ideas is also a new phrase to me

Ethical Design

- Products can cause harm: addiction, abuse of users’ privacy

- Psychology of addiction: operant conditioning

- Ethical codes: Medicine, Architecture, User Experience(?)

- Advocate for the user; be mindful; evangelism

- Evil by Design by Chris Nodder

Building Diversity and Inclusion Through Digital Platforms

- AIG used a Slack channel to promote engagement

- 6 of 200 people would participate

- Agreeing to help turned into research, handholding, moderating, and creating an onboarding guide

- Made discussions more event based to increase participation and engagement x3

Spontaneous Session

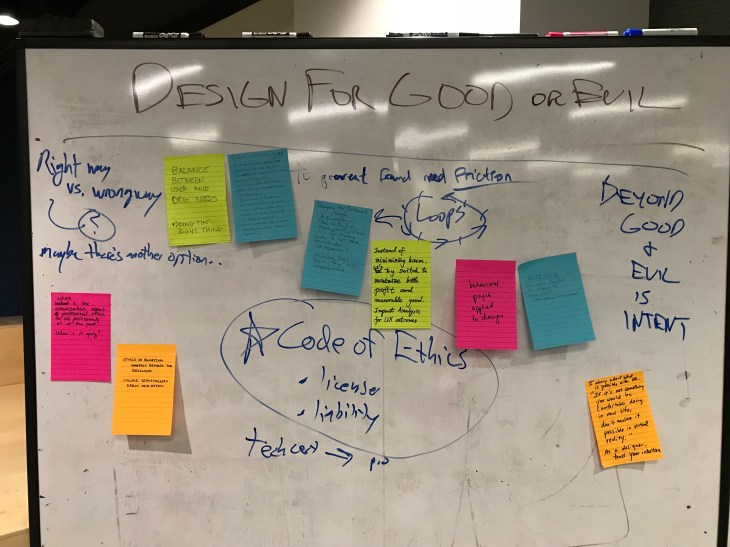

ETHICS AND DESIGN

UXPA Professional Code of Conduct

What would it mean for designers to be licensed?

- Code of ethics

- “Is there a UX pledge?”

- There’s an agile manifesto

- Who would govern the body?

- Like the Bar Association

- They’re legally liable

This can be a very dry conversation, but it’s one I wasn’t even aware was happening to the extent that it is. It makes sense, though. We’re seeing the consequences of thoughtless design and dark UX.

Companies hellbent on views and engagement chase them by hook or by crook. Sites meant to create a sense of community end up being echo chambers and cesspools of self-radicalization.

Audiences are often children who are manipulated into engaging, frequently with unhealthy and unrealistic images and questionable products.

There are plenty of more benign examples, of course. Bad design, if not unethical design. Pages designed for distraction. Simple views turned into awful multi-page slideshows. Ads made to look like content and buttons designed to trick you into clicking ads. Hijacked or faux URLs leading to unsavory and age-inappropriate content.

It’s kind of a mess out there.

I’d love to hear what questionable designs you all have encountered.

WORKSHOP: DESIGN ETHICALLY

Imagine a company that gives loans to people without a credit history based on their social media presence, browser history, and the behavior of the people in their social networks. What are the pros and cons?

How do we build ethical algorithms, machine learning, and automation?

That was the gist of the exercise. I’ll forgo the details, but here are the takeaways.

Brainstormed Solutions

- Transparency

- White box or open source algorithms

- Terms of agreement not designed to be obtuse/overwhelming

- Legal oversight

- Regulation

Takeaways

- “Nobody is neutral and doing nothing is not neutral.”

- “We need to reexamine how we measure success.”

- Designing for engagement

- But what about consideration for the user?

- Are the metrics harmful?

- “Inclusive + diverse teams are imperative.”

- “Ethics need to be emphasized in the boardroom.”

- “Product teams need to have a designated ethicist.”

And that’s all I have to say about that. Keep learning. Stay up.